Believe it or not, people once used film to take pictures through microscopes. Big, clunky cameras were bolted to the microscope, often with separate control units and no way to preview the picture. You pushed the shutter release, crossed your fingers and hoped for the best, and you wouldn’t know how it turned out until you developed the film. Fast forward a few decades and cameras are ubiquitous: in our pockets, on our mobile phones and devices, even in our doorbells, all ready to capture the moment at a moment’s notice. Microscopy cameras, however, are different from that ubiquitous smartphone camera. So why isn’t color imaging in microscopy as simple as a selfie?

Just as with cellphones, there are a wide variety of options for color cameras for microscopes. So how do you know which camera is best for you? Take a closer look at the technology.

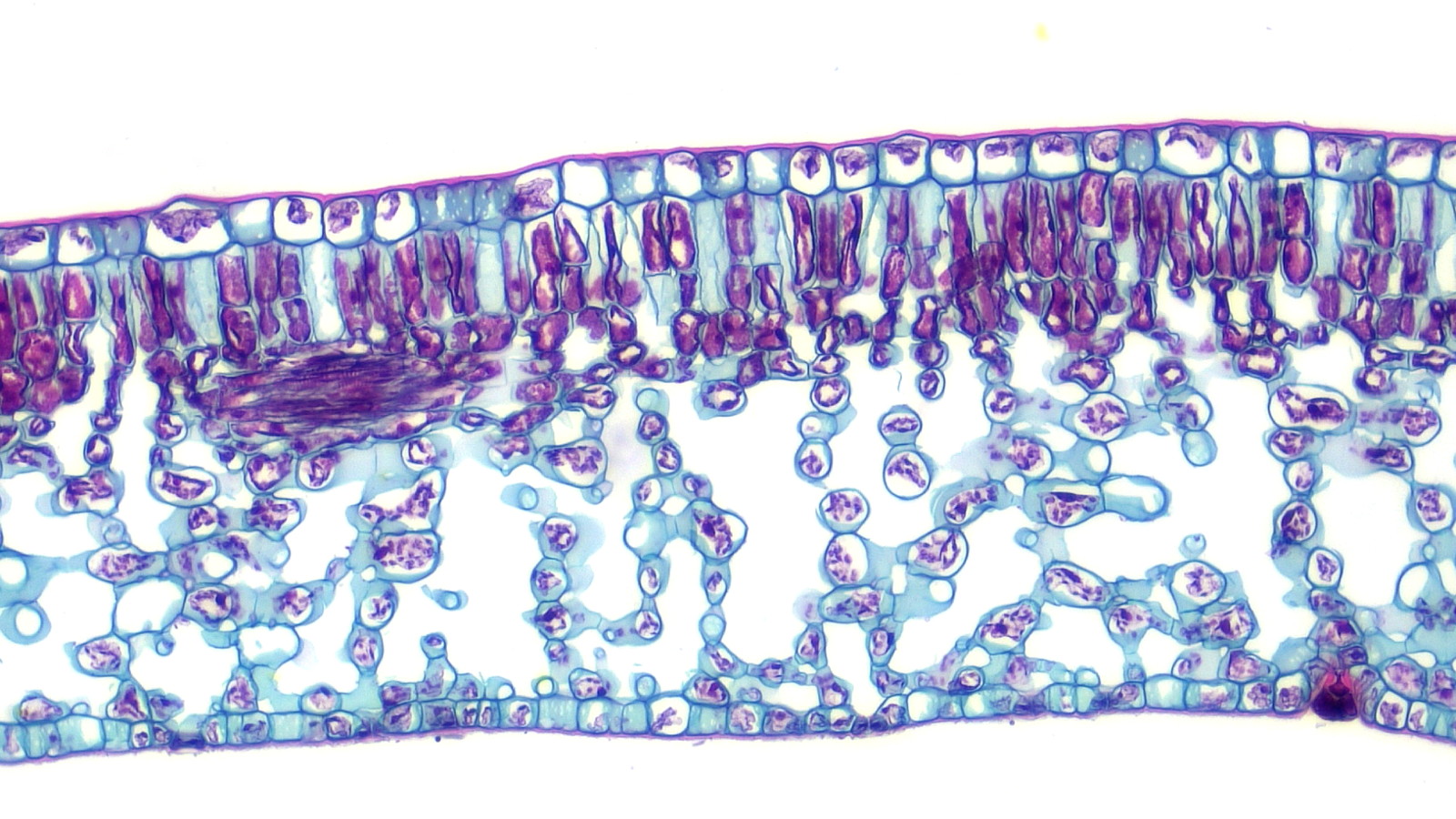

Color technology

The most common technology for producing a color image is a camera with a Bayer color mosaic filter (Fig. 1): a red, green or blue filter over each pixel, arranged in a repeating 2 × 2 pattern with two green filters for every red one and every blue one. Note that with this arrangement a given pixel only “sees” one color, so the other two colors at that pixel have to be estimated from its neighbors. Counting one true full-color sample per 2 × 2 block, the resolution of a full-color image (in megapixels) is only 25% of the total megapixels available on that camera. The Bayer filter is the same technology used in most professional and consumer cameras, including the cameras in our mobile phones. It is much more cost-effective than 3-chip cameras (a separate sensor for red, green and blue) or cameras that use liquid crystal tunable filters (which take sequential red, green and blue images and then overlay them). Of course, your application may dictate which technology is most appropriate (e.g. based on speed, resolution, flexibility or simplicity).
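
To make the 25% figure concrete, here is a minimal Python sketch. It assumes an RGGB tile layout (one common Bayer arrangement; others such as GRBG exist) and the simplest possible reconstruction, binning each 2 × 2 tile into one full-color “superpixel”. Real cameras usually interpolate (demosaic) instead, which yields an image with the full pixel count whose color detail is still limited by the mosaic. The function names are hypothetical, chosen for illustration only.

```python
from __future__ import annotations

from collections import Counter

# Assumed RGGB layout: each repeating 2x2 tile has one red, two green and one blue filter.
BAYER_TILE = (("R", "G"),
              ("G", "B"))


def filter_color(row: int, col: int) -> str:
    """Color of the filter over the pixel at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def count_filters(height: int, width: int) -> Counter:
    """Count the pixels under each filter color on a height x width sensor."""
    return Counter(filter_color(r, c) for r in range(height) for c in range(width))


def superpixel_rgb(raw: list[list[float]]) -> list[list[tuple[float, float, float]]]:
    """Bin each 2x2 RGGB tile of raw sensor values into one (R, G, B) pixel.

    The two green samples in a tile are averaged. The result has half the rows
    and half the columns of the input: 25% of the original pixel count.
    Assumes the image height and width are both even.
    """
    rgb = []
    for r in range(0, len(raw), 2):
        row_out = []
        for c in range(0, len(raw[0]), 2):
            red = raw[r][c]
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2
            blue = raw[r + 1][c + 1]
            row_out.append((red, green, blue))
        rgb.append(row_out)
    return rgb


if __name__ == "__main__":
    print(count_filters(4, 6))  # 12 green pixels, 6 red, 6 blue
    raw = [[10, 20, 30, 40],
           [22, 50, 44, 60]]
    print(superpixel_rgb(raw))  # [[(10, 21.0, 50), (30, 42.0, 60)]]
```

In the usage example, eight raw pixels become two full-color pixels: one quarter of the original count. Half of all pixels sit under green filters, which is why green detail survives the mosaic best.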

CCD or CMOS?

These acronyms refer to the technology used in the camera sensor. Traditionally, CCD sensors delivered better image quality (e.g. less noise), while CMOS sensors were cheaper and faster. Newer CMOS designs have greatly improved image quality, and CMOS is still king when it comes to speed. More recently, sensor manufacturers have concentrated their efforts on CMOS technology, and CCD sensors are becoming less widely available.

The turtle and the hare

If you are only imaging fixed slides or dead/inanimate objects, there’s no need for a lightning-fast camera: the specimen isn’t going anywhere, literally. So go for the best image quality possible, regardless of how fast or slow the camera is. On the other hand, if you are imaging living systems (e.g. live microorganisms) or a specimen in motion, a faster “shutter speed” (a shorter exposure time) freezes the motion and translates into sharper snapshots in time. For live image streaming, display in front of an audience, or video capture, higher frame rates are also preferred or even necessary (30 frames per second or faster is recommended); otherwise the audience could get motion sick.
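
A quick back-of-the-envelope calculation shows why exposure time matters for moving specimens. The blur, in camera pixels, is the distance the specimen moves during one exposure, projected onto the sensor and divided by the pixel size. This minimal Python sketch assumes a 1× camera adapter (so the objective magnification is the total magnification to the sensor) and uses made-up example values: an organism swimming at 100 µm/s, a 40× objective and 3.45 µm pixels. Substitute your own numbers.

```python
def motion_blur_pixels(speed_um_per_s: float, exposure_s: float,
                       magnification: float, pixel_size_um: float) -> float:
    """Smear of a moving specimen across the sensor during one exposure, in pixels.

    Assumes the total magnification from specimen to sensor equals the
    objective magnification (i.e. a 1x camera adapter).
    """
    distance_at_specimen_um = speed_um_per_s * exposure_s
    distance_at_sensor_um = distance_at_specimen_um * magnification
    return distance_at_sensor_um / pixel_size_um


def frame_interval_ms(frames_per_second: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / frames_per_second


if __name__ == "__main__":
    # Hypothetical example: 100 um/s swimmer, 40x objective, 3.45 um pixels.
    for exposure in (1 / 30, 1 / 500):
        blur = motion_blur_pixels(100, exposure, 40, 3.45)
        print(f"exposure {exposure * 1000:.1f} ms -> blur of about {blur:.1f} pixels")
    print(f"30 fps -> a new frame every {frame_interval_ms(30):.1f} ms")
```

With these assumed values, a 1/30 s exposure smears the organism across roughly 39 pixels, while a 1/500 s exposure keeps the blur to about 2 pixels.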

Cameras with HDMI output generally provide faster frame rates for live preview, and often save images to an internal storage device. USB-output cameras used to have slower frame rates than their HDMI siblings, but newer USB 3.0 cameras can stream at well over 60 frames per second. USB cameras save images directly to a PC, and their software offers greater control of camera settings during image acquisition. The latest arrivals are WiFi-connected cameras that use a mobile device and a camera app for image acquisition. Thanks to the latest wireless technologies, their frame rates are usually somewhere between those of HDMI and USB-output cameras.
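
Why did USB 3.0 make such a difference? Uncompressed video needs a lot of bandwidth. The minimal Python sketch below estimates the raw data rate of a video stream and compares it with the nominal signaling rates of USB 2.0 (480 Mbit/s) and USB 3.0 (5 Gbit/s). It assumes uncompressed 8-bit RGB (3 bytes per pixel) at 1920 × 1080. Many cameras instead send raw Bayer data or compress the stream, and real-world USB throughput is noticeably lower than the nominal figures, so treat the results as rough upper bounds rather than predictions.

```python
# Nominal signaling rates; usable throughput is lower after encoding and protocol overhead.
USB2_BITS_PER_S = 480e6  # USB 2.0 Hi-Speed
USB3_BITS_PER_S = 5e9    # USB 3.0 SuperSpeed


def stream_bits_per_second(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw (uncompressed) data rate of a video stream, in bits per second."""
    return width * height * bytes_per_pixel * 8 * fps


def max_fps(link_bits_per_s: float, width: int, height: int, bytes_per_pixel: int) -> float:
    """Upper bound on frame rate if the link carried nothing but image data."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return link_bits_per_s / bits_per_frame


if __name__ == "__main__":
    # Assumed example: 1080p, 8-bit RGB, 60 frames per second.
    needed = stream_bits_per_second(1920, 1080, 3, 60)
    print(f"1080p RGB at 60 fps needs about {needed / 1e9:.2f} Gbit/s")
    for name, link in (("USB 2.0", USB2_BITS_PER_S), ("USB 3.0", USB3_BITS_PER_S)):
        print(f"{name}: at most ~{max_fps(link, 1920, 1080, 3):.0f} fps")
```

Under these assumptions a 60 fps stream needs roughly 3 Gbit/s: far beyond USB 2.0, which tops out near 10 fps for this format, but within the nominal capacity of USB 3.0.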

More is better, right?

As general consumers of imaging technology (first point-and-shoot cameras, now smartphones), we’ve been led to believe that more megapixels means better images. NOT TRUE, at least not for microscopy! On a sensor of a given size, more pixels (the individual light-sensing elements of a camera sensor) means smaller pixels, which in turn means less area in each pixel to collect light, and therefore lower sensitivity. In addition, each pixel on a CMOS sensor carries its own readout transistors, which take up part of the pixel’s area, so the light-sensitive portion of a CMOS pixel tends to be smaller than that of a CCD pixel of the same size.
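
The trade-off is simple geometry. This minimal Python sketch assumes a hypothetical 8.8 mm × 6.6 mm sensor with square pixels that tile the whole area with no gaps (an idealization), and computes the pixel pitch for a given megapixel count.

```python
import math


def pixel_pitch_um(sensor_width_mm: float, sensor_height_mm: float, megapixels: float) -> float:
    """Approximate side length of a square pixel, in micrometres.

    Assumes the pixels tile the whole sensor area with no gaps (an idealization).
    """
    sensor_area_um2 = (sensor_width_mm * 1000) * (sensor_height_mm * 1000)
    area_per_pixel_um2 = sensor_area_um2 / (megapixels * 1e6)
    return math.sqrt(area_per_pixel_um2)


if __name__ == "__main__":
    # Hypothetical 8.8 mm x 6.6 mm sensor, comparing two pixel counts.
    for mp in (5, 20):
        pitch = pixel_pitch_um(8.8, 6.6, mp)
        print(f"{mp} MP -> pixel pitch ~{pitch:.2f} um, area ~{pitch ** 2:.1f} um^2")
```

On the same sensor, going from 5 to 20 megapixels halves the pixel pitch (about 3.4 µm to about 1.7 µm) and cuts each pixel’s light-collecting area to a quarter.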

And when it comes to sensitivity for low-light applications, [pixel] size does matter. As I alluded to above, smaller pixels are less sensitive than larger pixels. So for situations where sensitivity is important (e.g. fluorescence, darkfield, phase contrast), larger pixels (and consequently lower-megapixel cameras) are actually preferred. There is also an ideal pixel size for each microscope magnification, determined by the resolution of the microscope (please refer to the article “What’s the Deal with Megapixels?”). Suffice it to say that the higher the magnification, the larger the ideal pixel size and, consequently, the fewer pixels fit on the sensor.
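
Here is a minimal Python sketch of that reasoning. It assumes the Rayleigh criterion (d = 0.61 λ / NA) for the microscope’s resolution, green light at 550 nm, a 1× camera adapter, and the common Nyquist rule of thumb that a pixel should be no larger than half of the smallest resolvable feature as projected onto the sensor (some guides use a factor of about 2.3 to 3 instead). The objective list is illustrative, and the referenced article may use a different criterion.

```python
RAYLEIGH_FACTOR = 0.61
NYQUIST_FACTOR = 2.0  # rule of thumb; some guides use ~2.3-3


def resolution_um(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable distance at the specimen (Rayleigh criterion), in micrometres."""
    return RAYLEIGH_FACTOR * (wavelength_nm / 1000) / numerical_aperture


def ideal_pixel_size_um(magnification: float, numerical_aperture: float,
                        wavelength_nm: float = 550, adapter_mag: float = 1.0) -> float:
    """Largest pixel that still samples the projected resolution at the Nyquist rate."""
    projected_um = resolution_um(wavelength_nm, numerical_aperture) * magnification * adapter_mag
    return projected_um / NYQUIST_FACTOR


if __name__ == "__main__":
    # Illustrative objectives (magnification, NA); check your own objective's markings.
    for mag, na in ((10, 0.25), (40, 0.75), (100, 1.4)):
        print(f"{mag}x / NA {na}: ideal pixel ~{ideal_pixel_size_um(mag, na):.1f} um")
```

With these assumptions, the ideal pixel grows from roughly 7 µm at 10× to roughly 12 µm at 100×: higher magnification calls for larger pixels, so fewer of them fit on a sensor of a given size.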

So, which camera is best? That’s for you to decide (and our technical applications people can help). Review the software and, of course, the image quality. Consider how you will use the images (still images or live viewing), and the camera’s connectivity (HDMI, USB or WiFi). One last word of advice: take the camera for a test drive.

Thanks for reading!